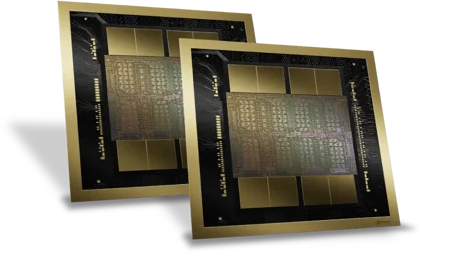

NVIDIA® B200

NVIDIA's Blackwell architecture is designed to redefine AI and HPC with a major leap in parallel computing power.

Built on the Blackwell architecture, the NVIDIA B200 is the world's most powerful GPU for AI and HPC. Each B200 uses a multi-die design, and a B200 system interconnects eight of these GPUs to deliver leading-edge performance and efficiency.

With B200 SXM you get:

- Accelerated AI inference for workloads such as image and speech recognition, powered by Tensor Cores that process large datasets fast enough for real-time applications.
- Faster deep learning: the B200's large memory capacity and processing power reduce training and deployment time for complex models and make it possible to train on larger datasets.
- Higher system performance: building on the Blackwell architecture's advances in computing, an 8-GPU B200 system delivers 3X the training performance and 15X the inference performance of DGX H100.
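The memory claim above can be made concrete with a back-of-the-envelope estimate. The Python sketch below computes an upper bound on how many model parameters' weights fit in the 1,440GB of total GPU memory listed in the specifications, for a few assumed numeric formats. It counts weights only and ignores activations, optimizer state, KV cache, and framework overhead, so practical capacity is considerably lower. The format list and the `max_params_billions` helper are illustrative assumptions, not NVIDIA figures.

```python
# Weights-only estimate of how many model parameters fit in GPU memory.
# Assumption: ignores activations, optimizer state, KV cache, and framework
# overhead, so the real usable capacity is substantially lower.

TOTAL_GPU_MEMORY_GB = 1_440  # total across 8 GPUs, from the specification table
NUM_GPUS = 8

# Hypothetical selection of formats: bytes needed to store one parameter.
BYTES_PER_PARAM = {
    "FP32": 4.0,
    "BF16": 2.0,
    "FP8": 1.0,
}


def max_params_billions(memory_gb: float, bytes_per_param: float) -> float:
    """Upper bound on parameters (in billions) whose weights fit in memory_gb.

    Treats 1 GB as 1e9 bytes, so GB divided by bytes-per-parameter
    directly gives billions of parameters.
    """
    return memory_gb / bytes_per_param


per_gpu_gb = TOTAL_GPU_MEMORY_GB / NUM_GPUS  # 180.0 GB per GPU

for fmt, nbytes in BYTES_PER_PARAM.items():
    system = max_params_billions(TOTAL_GPU_MEMORY_GB, nbytes)
    single = max_params_billions(per_gpu_gb, nbytes)
    print(f"{fmt}: ~{system:,.0f}B params (8 GPUs), ~{single:,.0f}B params (1 GPU)")
```

For BF16 weights this works out to roughly 720 billion parameters across the system and 90 billion per GPU, before any runtime memory is accounted for.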

| Specification | B200 (8-GPU system) |
|---|---|
| GPU | 8x NVIDIA Blackwell GPUs |
| GPU Memory | 1,440GB total GPU memory |
| Performance | 72 petaFLOPS training and 144 petaFLOPS inference |
| Power Consumption | ~14.3kW max |
| CPU | 2x Intel® Xeon® Platinum 8570 processors; 112 cores total; 2.1 GHz (base), 4 GHz (max boost) |
| System Memory | Up to 4TB |
| Networking | 4x OSFP ports serving 8x single-port NVIDIA ConnectX-7 VPI; 2x dual-port QSFP112 NVIDIA BlueField-3 DPU |
| Management Network | 10Gb/s onboard NIC with RJ45; 100Gb/s dual-port Ethernet NIC; host baseboard management controller (BMC) with RJ45 |
| Storage | OS: 2x 1.9TB NVMe M.2; internal storage: 8x 3.84TB NVMe U.2 |
| Software | NVIDIA AI Enterprise: optimized AI software; NVIDIA Base Command™: orchestration, scheduling, and cluster management; DGX OS / Ubuntu: operating system |
| Rack Units (RU) | 10 RU |
| System Dimensions | Height: 17.5in (444mm); width: 19.0in (482.2mm); length: 35.3in (897.1mm) |
| Operating Temperature | 5–30°C (41–86°F) |
| Enterprise Support | Three-year Enterprise Business-Standard Support for hardware and software; 24/7 Enterprise Support portal access; live agent support during local business hours |
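Several per-component figures follow from the specification table by simple arithmetic. The Python sketch below derives them, assuming resources divide evenly across the 8 GPUs and 2 CPU sockets. The `SPEC` dictionary, `derived`, and `c_to_f` are hypothetical names for illustration, and the performance-per-watt ratio uses the system-wide maximum power draw (CPUs, networking, and storage included), so treat it only as a rough indicator.

```python
# Derive per-GPU and per-component figures from the 8-GPU B200 system specs.
# Assumption: resources split evenly across GPUs and CPU sockets.

SPEC = {
    "gpus": 8,
    "gpu_memory_gb": 1_440,
    "training_pflops": 72,
    "inference_pflops": 144,
    "max_power_kw": 14.3,
    "cpu_sockets": 2,
    "cpu_cores_total": 112,
    "data_drives": 8,
    "data_drive_tb": 3.84,
    "height_in": 17.5,
    "temp_range_c": (5, 30),
}

RACK_UNIT_IN = 1.75  # one rack unit (EIA-310) is 1.75 in tall


def c_to_f(celsius: float) -> float:
    """Convert Celsius to Fahrenheit."""
    return celsius * 9 / 5 + 32


def derived(spec: dict) -> dict:
    """Return figures computed from the raw spec values."""
    gpus = spec["gpus"]
    return {
        "memory_per_gpu_gb": spec["gpu_memory_gb"] / gpus,  # 180.0
        "training_pflops_per_gpu": spec["training_pflops"] / gpus,  # 9.0
        "inference_pflops_per_gpu": spec["inference_pflops"] / gpus,  # 18.0
        "cores_per_socket": spec["cpu_cores_total"] // spec["cpu_sockets"],  # 56
        "internal_storage_tb": spec["data_drives"] * spec["data_drive_tb"],  # ~30.72
        # PFLOPS per kW equals TFLOPS per W; uses system-wide max power.
        "training_tflops_per_watt": round(
            spec["training_pflops"] / spec["max_power_kw"], 2
        ),  # 5.03
        "rack_units": spec["height_in"] / RACK_UNIT_IN,  # 10.0
        "temp_range_f": tuple(c_to_f(c) for c in spec["temp_range_c"]),  # (41.0, 86.0)
    }


for name, value in derived(SPEC).items():
    print(f"{name}: {value}")
```

As a consistency check, 17.5in of height divided by 1.75in per rack unit gives exactly 10, matching the Rack Units row, and 5–30°C converts to 41–86°F as listed.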