AI models are becoming increasingly complex. Training them requires massive compute power and the flexibility to scale. NVIDIA A100 Tensor Cores with Tensor Float 32 (TF32) provide up to 20x higher performance than NVIDIA Volta with no code changes, and an additional 2x gain with automatic mixed precision and FP16.
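To make the TF32 and FP16 formats mentioned above more concrete, the following is a minimal sketch that emulates their storage precision in plain Python. It assumes the commonly described TF32 layout (FP32's 8-bit exponent with a 10-bit mantissa) and uses round-to-nearest-even for simplicity; the rounding mode used by actual hardware may differ. The helper names `round_to_tf32` and `round_to_fp16` are hypothetical and not part of any NVIDIA API.

```python
import struct


def round_to_tf32(x: float) -> float:
    """Emulate TF32 precision for a finite value (illustrative only).

    Assumption: TF32 keeps FP32's sign bit and 8-bit exponent but only
    10 of the 23 mantissa bits. Rounding here is nearest-even, which is
    a simplification and may not match what the hardware does.
    """
    # Reinterpret the value as a 32-bit IEEE 754 single-precision pattern.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    dropped = 13  # 23 FP32 mantissa bits minus the 10 kept by TF32
    lsb = (bits >> dropped) & 1  # lowest surviving bit, used for tie-breaking
    bits += (1 << (dropped - 1)) - 1 + lsb  # round to nearest, ties to even
    bits &= ~((1 << dropped) - 1) & 0xFFFFFFFF  # clear the dropped bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def round_to_fp16(x: float) -> float:
    """Round a value to IEEE 754 half precision (5-bit exponent, 10-bit mantissa).

    Raises OverflowError when x is outside the FP16 range.
    """
    return struct.unpack("<e", struct.pack("<e", x))[0]


if __name__ == "__main__":
    small_step = 1.0 + 2.0 ** -12  # finer than either format can resolve near 1.0
    print("TF32:", round_to_tf32(small_step))
    print("FP16:", round_to_fp16(small_step))

    large = 1.0e10  # fits the 8-bit exponent of TF32, not the 5-bit one of FP16
    print("TF32:", round_to_tf32(large))
    try:
        round_to_fp16(large)
    except OverflowError:
        print("FP16: out of range")
```

Under these assumptions, both formats resolve the same step size near 1.0, but TF32 retains the dynamic range of FP32, while FP16 cannot represent large magnitudes. This is one reason mixed-precision training with FP16 is usually paired with loss scaling.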

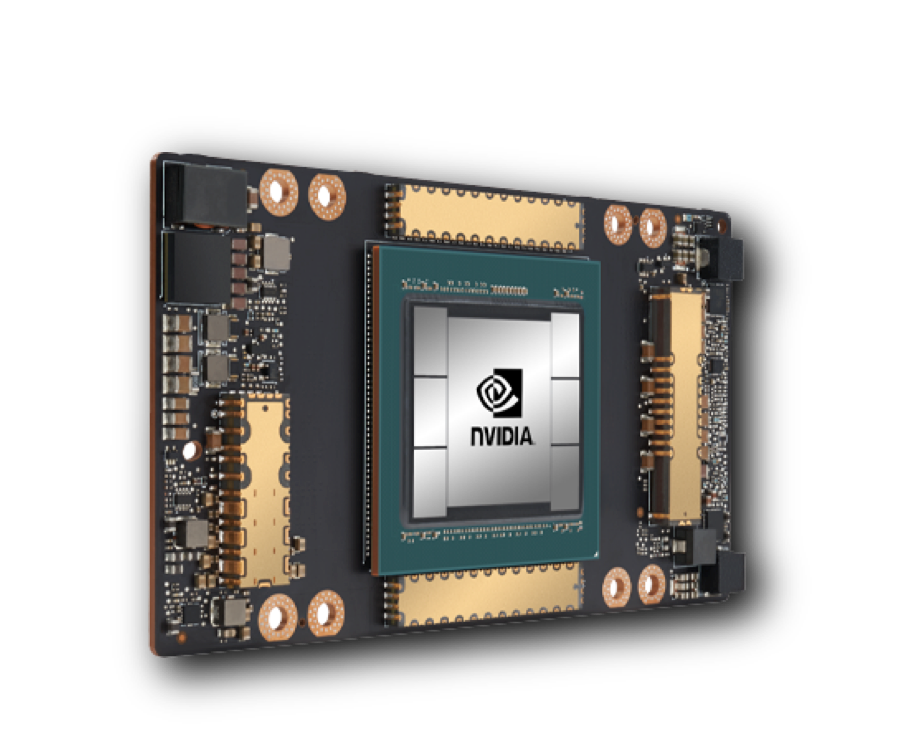

NVIDIA® A100 Tensor Core GPU

Unprecedented acceleration at every scale.